Highlights & News

The long summer of AI

Artificial intelligence is not a modern-day phenomenon. As early as 1966, the language programme ELIZA was able to trick computer users into believing that they were conversing with a real person. In the 1970s, the first expert systems appeared; they drew on their knowledge bases to help make complex decisions. The MYCIN programme, for example, suggested antibiotics to fight the bacteria behind blood infections. Some 15 years later, however, interest in AI was declining rapidly. The algorithms had failed to live up to the high expectations placed on them, and disappointment spread. As a result, financial support for ambitious projects dwindled.

This paralysing phase has gone down in the history of artificial intelligence as the “AI winter”. It lasted for more than a decade. But times have changed, says Dr. Jenia Jitsev from the Jülich Supercomputing Centre (JSC): “We are at the beginning of a long AI summer. The fruits are ripening. AI systems are currently delivering revolutionary results, for example in speech and image processing. And at present there is no sign of a fundamental obstacle that could lead to another ice age.”

The computer scientist heads the Scalable Learning & Multi-Purpose AI Lab at JSC. He is firmly convinced that artificial intelligence will be the key technology of the 21st century. Language programmes such as ChatGPT have shown many people outside professional circles what the technology can do. ChatGPT can hold conversations in natural language. It writes speeches, poems and summaries, it writes computer code, and by passing the Bavarian “Abitur” exam it has even earned the university entrance qualification.

Yet the algorithm has no deeper understanding of the relationships it writes about. It has been trained on an unimaginably large amount of text data from e-books and websites. From this data, it has independently worked out how a language works: with what probability a certain word follows another.


“Of course, these chatbots are still unreliable in some respects. Not everything they say should be taken at face value. When it comes to scientific uses in particular, we need to take a close look at how plausible the AI’s results are,” Jenia Jitsev points out. “As sophisticated tools, however, AI systems could take over a whole range of tasks for us in the future, much like a personal assistant.”

A large language model is currently being co-developed at Jülich: as the European counterpart to the established systems, OpenGPT-X will be geared entirely to the needs of the continent. For example, it will comply with European data protection regulations. It is being trained at Jülich in German using high-quality sources.

“Europe has both the necessary computing power and expertise in software development to be innovative in AI.”

THOMAS LIPPERT

The “engine” behind this chatbot is what is known as a foundation model. These are powerful algorithms that have been pre-trained on large amounts of mostly unstructured data. In a second training step, they can be adapted to specific tasks, for example as an assistance system in medicine that helps doctors make diagnoses or choose a therapy. It is these foundation models that are chiefly responsible for the current AI boom.

Models of this size require powerful, customized hardware. That is exactly what Jülich can offer: “For years, we have been working with partners to develop ever more powerful computers and provide AI computing time for scientists at JSC,” explains JSC director Prof. Thomas Lippert, adding, “In particular, the installation of the GPU-based JUWELS Booster in 2020, one of Europe’s fastest supercomputers, proved to be groundbreaking for the use of AI models.” (see box)

Around 60 projects that use AI and machine learning methods are now running on the JUWELS supercomputer. In addition to OpenGPT-X, they include the image model of the German LAION initiative, on which the Stable Diffusion image generator is based. Stable Diffusion creates detailed, professional-looking images from short text descriptions. “These AI models need not take a back seat to commercial models from the USA,” says Thomas Lippert. “And they have the advantage that they are published as open source and are comparatively secure in terms of data protection.”

In addition, Europe’s first exascale computer, JUPITER, will go online at Jülich in 2024 and break the barrier of one quintillion (10¹⁸) computing operations per second. JUPITER, too, has a GPU booster module, which is expected to enable unique breakthroughs in the field of artificial intelligence. As part of the European supercomputing initiative EuroHPC JU, the system will be available for AI applications in research and industry.

What are GPUs?

Graphics processing units are ideally suited to training the deep neural networks behind most high-performance AI algorithms. Although each GPU computing core is less powerful than a core of a general-purpose processor (CPU), a single GPU contains far more of them. This allows GPUs to process data in a highly parallel manner, which gives them a considerable speed advantage in machine learning tasks that require a large number of fairly simple calculations.
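The division of labour described in the box can be sketched in Python. This is an illustration under stated assumptions, not GPU code: the function names are hypothetical, and Python threads do not actually compute in parallel here, so there is no speed gain. What the sketch shows is the structure that suits GPUs: a matrix-vector product, a basic operation in neural networks, splits into many small dot products that do not depend on one another and can therefore be handed out to many workers at once.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row: list[float], vector: list[float]) -> float:
    # One small, independent calculation: the kind of job a single GPU core handles.
    return sum(a * b for a, b in zip(row, vector))


def matvec_sequential(matrix: list[list[float]], vector: list[float]) -> list[float]:
    # CPU-style: one worker processes the rows one after another.
    return [dot(row, vector) for row in matrix]


def matvec_parallel(matrix: list[list[float]], vector: list[float], workers: int = 8) -> list[float]:
    # GPU-style decomposition: each row's dot product is handed to a pool of workers.
    # pool.map returns results in input order, so the output matches the sequential version.
    # Note: in CPython, threads will not speed up this pure-Python arithmetic; the point
    # is only to show that the rows can be computed independently of each other.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: dot(row, vector), matrix))


matrix = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
vector = [1, 1, 1]
print(matvec_sequential(matrix, vector))  # [6, 15, 24]
print(matvec_parallel(matrix, vector))    # [6, 15, 24]
```

Because no row's result depends on any other row, the work can be spread across as many cores as are available. A GPU exploits exactly this property, with thousands of cores in hardware rather than a handful of Python threads.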

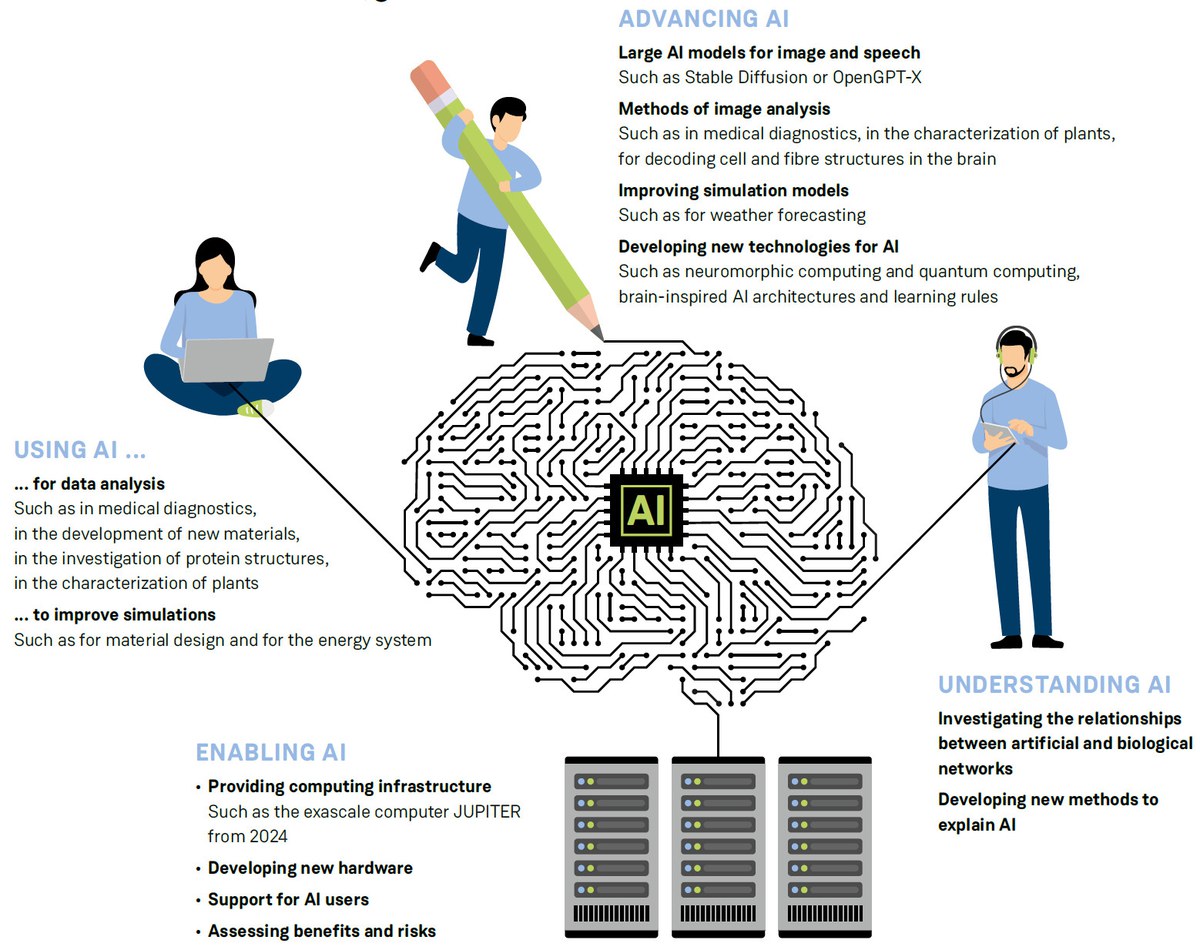

Jülich is not only laying the foundations for using AI by developing and improving AI models, however. Artificial intelligence also delivers results in various Jülich research areas, such as the life sciences, medicine and neuroscience, where it helps to detect brain tumours, for example. Machine learning also aids the exploration of new materials and weather forecasting.

60 projects that use AI and machine learning methods are now running on the Jülich supercomputer JUWELS.

Experience to date shows, however, that transferring AI knowledge from research to industry in particular can unlock further potential: although Germany is one of the leading countries in terms of the number of scientific publications on artificial intelligence, only one in eight companies in the country uses such technologies. The German government’s AI action plan aims to help close this gap: around €1.6 billion is to be channelled into the research, development and application of artificial intelligence. The Jülich supercomputers with their AI boosters will play a major role in this, because one of the fields of action identified in the action plan is the targeted expansion of AI infrastructure.

In an international comparison, the USA still dominates the AI market. Large companies in particular, such as Google and Facebook’s parent company Meta, are investing in machine learning. The AI sector in the USA, India and China is also currently growing much faster than in Europe. Germany, France and Italy recently announced their intention to cooperate more closely on the development of AI, because much remains to be done to ensure that the long summer of artificial intelligence does not pass us by.

Artificial intelligence at Jülich

Text: Arndt Reuning | illustration (created with the help of artificial intelligence): SeitenPlan with Stable Diffusion and Adobe Firefly | graphic: SeitenPlan